BACKGROUND

Atmospheric releases of hazardous materials, whether accidental or intentional, continue to pose a threat to United States citizens and to troops at home and abroad. To counter this threat, RAL supports research and the development of novel techniques and systems that more accurately simulate the atmospheric state and the evolution of the released material in both time and space, for planning, real-time response, and forensic purposes.

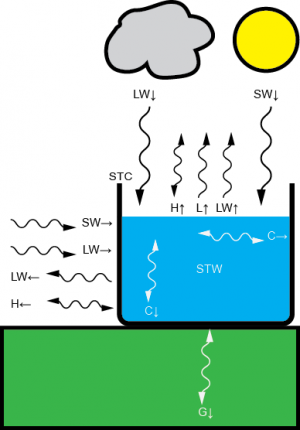

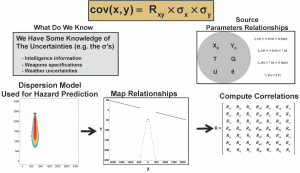

Figure 1

HAZARDOUS MATERIAL SOURCE TERM ESTIMATION

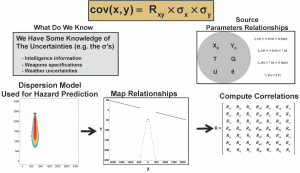

In addition to a representative description of the atmospheric state (past, present, and future), Atmospheric Transport and Dispersion (AT&D) modeling systems require precise specifications of the material release characteristics (e.g., location, time, and quantity). In most real-time response scenarios, the specifics of the material release are unknown, and only ancillary concentration sensor measurements are available.

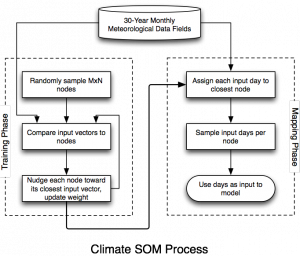

RAL is developing algorithms and techniques to characterize the source and material, so that the source release can be quickly reconstructed and estimated from these limited sensor observations. In particular, RAL is developing a tailored Source Term Estimation (STE) and hazard refinement system called the Variational Iterative Refinement STE Algorithm (VIRSA). VIRSA combines several components: the Second-order Closure Integrated PUFF model (SCIPUFF), its corresponding STE model, a hybrid Lagrangian-Eulerian Plume Model (LEPM), its formal numerical adjoint, and the software infrastructure necessary to link them (Fig. 1). SCIPUFF and its internal STE model are used to calculate a "first guess" source estimate based on available hazardous material sensor observations and meteorological observations. The LEPM and its adjoint are then used to iteratively refine the "first guess" source and wind estimates using variational minimization techniques.
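The two-stage idea (a first guess followed by iterative variational refinement) can be illustrated with a minimal sketch. This is not VIRSA, SCIPUFF, or the LEPM: it assumes a toy two-dimensional Gaussian puff with a fixed spread as the forward model, noise-free synthetic sensor readings, and an analytic gradient that stands in for the gradient an adjoint model would supply. The wind estimate is not refined here. All names (`forward`, `cost`, `gradient`, `refine`, `SIGMA`) and all numerical values are hypothetical and chosen only for illustration.

```python
import math

SIGMA = 1.0  # assumed fixed puff spread (arbitrary units); not a VIRSA parameter


def forward(source, sensors):
    """Toy forward model: 2-D Gaussian puff concentration at each sensor."""
    x0, y0, q = source
    norm = q / (2.0 * math.pi * SIGMA ** 2)
    return [norm * math.exp(-((x - x0) ** 2 + (y - y0) ** 2) / (2.0 * SIGMA ** 2))
            for x, y in sensors]


def cost(source, sensors, obs):
    """Least-squares misfit between modeled and observed concentrations."""
    return 0.5 * sum((c - o) ** 2 for c, o in zip(forward(source, sensors), obs))


def gradient(source, sensors, obs):
    """Analytic gradient of the cost with respect to (x0, y0, q).

    Stand-in for the gradient that an adjoint model would provide.
    """
    x0, y0, q = source
    gx = gy = gq = 0.0
    for (x, y), c, o in zip(sensors, forward(source, sensors), obs):
        r = c - o  # residual at this sensor
        gx += r * c * (x - x0) / SIGMA ** 2
        gy += r * c * (y - y0) / SIGMA ** 2
        gq += r * c / q
    return [gx, gy, gq]


def refine(first_guess, sensors, obs, iterations=1000):
    """Gradient descent with backtracking (Armijo) line search."""
    source = list(first_guess)
    for _ in range(iterations):
        j = cost(source, sensors, obs)
        g = gradient(source, sensors, obs)
        g2 = sum(v * v for v in g)
        if g2 < 1e-24:  # gradient is effectively zero: converged
            break
        step = 1.0
        while step > 1e-12:
            trial = [p - step * v for p, v in zip(source, g)]
            if cost(trial, sensors, obs) <= j - 1e-4 * step * g2:
                source = trial  # accept a step that sufficiently lowers the cost
                break
            step *= 0.5
        else:
            break  # no acceptable step found; stop refining
    return source


# Synthetic experiment: a 5 x 5 sensor grid and a known "true" release.
sensors = [(float(i), float(j)) for i in range(5) for j in range(-1, 4)]
truth = [2.0, 1.0, 10.0]       # x0, y0, released mass (hypothetical values)
obs = forward(truth, sensors)  # noise-free synthetic observations

first_guess = [1.5, 1.4, 8.0]  # plays the role of the "first guess" estimate
refined = refine(first_guess, sensors, obs)

print("first-guess cost:", cost(first_guess, sensors, obs))
print("refined cost:    ", cost(refined, sensors, obs))
print("refined source:  ", refined)
```

In an operational setting the gradient would come from the adjoint of the dispersion model rather than from a closed-form expression, and the observations would contain noise, so a sketch like this only conveys the structure of the minimization loop.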

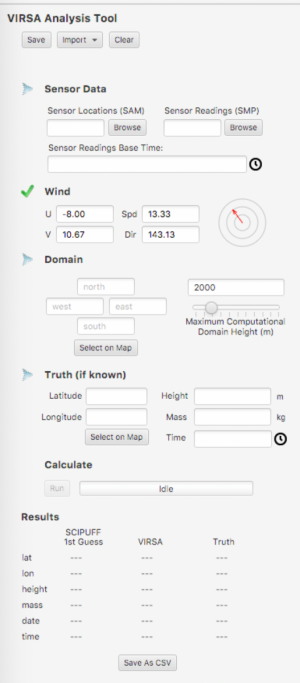

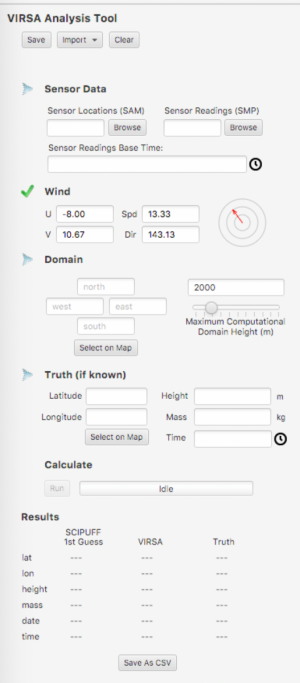

Figure 2. An example of the graphical interface

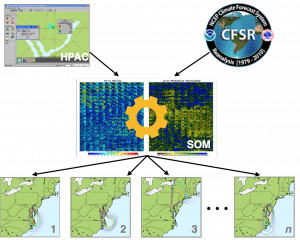

Version 1.0 of this system was successfully integrated into two US Department of Defense (DoD) emergency response modeling systems, HPAC (Hazard Prediction and Assessment Capability) and JEM (Joint Effects Model), in FY2012 (Fig. 2 shows an example of the graphical interface). This version of VIRSA can refine the "first guess" source location, mass, and release time using material sensor observations and meteorological observations. A stand-alone version that refines the first guess using the LEPM and its formal numerical adjoint was delivered to the Defense Threat Reduction Agency (DTRA) in FY2014.

Specific accomplishments and plans for the next fiscal year are summarized below.

ACCOMPLISHMENTS

PLANS

- Integrate the VIRSA software within the HPAC framework.

- Begin exploring VIRSA integration within the Joint Effects Model (JEM).

- Add urban capability to VIRSA. We plan to use the Urban Dispersion Model (UDM) and the reverse UDM developed at the Defence Science and Technology Laboratory (Dstl) to upgrade the current VIRSA capabilities.